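Chapter 7 Pod Controllers

1. What controllers do

1. A bare Pod resource is not recreated after it is deleted.

2. A controller watches Pods on the user's behalf and ensures that the desired number of Pod replicas is always running on the appropriate nodes.

3. If more Pod replicas are running than the user wants, the controller deletes Pods until the count matches the desired number.

4. If fewer Pod replicas are running than the user wants, the controller creates Pods until the count matches the desired number.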
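Points 3 and 4 above amount to a reconcile loop: compare the desired count with what is running, then create or delete the difference. The following is a minimal Python sketch of that idea, not the real controller code; the function name `reconcile` and the Pod names are hypothetical.

```python
from __future__ import annotations


def reconcile(desired: int, running: list[str]) -> list[str]:
    """Return the actions a controller would take to reach `desired` replicas."""
    actions = []
    if len(running) < desired:
        # Too few Pods: create the missing ones.
        for i in range(desired - len(running)):
            actions.append(f"create pod-new-{i}")
    elif len(running) > desired:
        # Too many Pods: delete the surplus.
        for name in running[desired:]:
            actions.append(f"delete {name}")
    return actions


print(reconcile(3, ["pod-a"]))
print(reconcile(1, ["pod-a", "pod-b", "pod-c"]))
```

A real controller repeats this comparison every time the cluster state changes, which is why a manually deleted Pod comes back.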

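2. Common controller types

ReplicaSet (RS):

Creates Pods according to the desired replica count and keeps that many replicas running at all times.

Deployment:

A Deployment manages ReplicaSets, which in turn keep the desired number of Pod replicas running.

Supports rolling updates and rollbacks; by default the 10 most recent revisions are kept for rollback.

Provides declarative configuration and can be modified on the fly.

The best-suited controller for managing stateless applications.

A node may run zero or more of the Deployment's Pods.

DaemonSet:

Runs exactly one Pod per node, and that Pod must always be running.

StatefulSet:

For stateful applications.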

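3. ReplicaSet controller

https://kubernetes.io/zh/docs/concepts/workloads/controllers/replicaset/

A ReplicaSet's purpose is to maintain a stable set of replica Pods running at any given time. It is therefore often used to guarantee the availability of a specified number of identical Pods.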

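1) Write the RS manifest

apiVersion: apps/v1                     # API version
kind: ReplicaSet                        # resource type: ReplicaSet
metadata:                               # metadata of the RS
  name: nginx-rs                        # name of the RS
  labels:                               # labels on the RS
    app: nginx-rs                       # the RS's own label
spec:                                   # desired state: how the Pods should run
  replicas: 2                           # number of Pods to run
  selector:                             # selector
    matchLabels:                        # labels to match
      app: nginx-rs                     # Pod label to match
  template:                             # template for the Pods to create
    metadata:                           # metadata of the Pod
      labels:                           # labels on the Pod
        app: nginx-rs                   # the Pod's label
    spec:                               # container configuration
      containers:                       # container list
      - name: nginx-rs                  # container name
        image: nginx                    # container image
        imagePullPolicy: IfNotPresent   # image pull policy
        ports:                          # exposed ports
        - name: http                    # port name
          containerPort: 80             # port exposed by the container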
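The `selector.matchLabels` field in the manifest above decides which Pods the RS counts as its own: a Pod matches when every key/value pair in the selector is present in the Pod's labels. A minimal Python sketch of that rule (the helper name `matches` is hypothetical and this is an illustration, not the Kubernetes implementation):

```python
def matches(match_labels: dict, pod_labels: dict) -> bool:
    """True when every selector key/value pair also appears in the Pod's labels."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


selector = {"app": "nginx-rs"}
print(matches(selector, {"app": "nginx-rs", "tier": "web"}))  # extra labels are fine
print(matches(selector, {"app": "nginx-dp"}))                 # value differs
```

This is also why the labels under `template.metadata.labels` have to satisfy the selector: otherwise the RS would create Pods it can never count.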

2) Apply the RS manifest

kubectl create -f nginx-rs.yaml

3) Inspect the RS

kubectl get rs

kubectl get pod -o wide

4) Edit the YAML file and apply the change

vim nginx-rs.yaml

kubectl apply -f nginx-rs.yaml

5) Change the live configuration: scale out, scale in, upgrade

kubectl edit rs nginx-rs

kubectl scale rs nginx-rs --replicas=5

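6) How do you delete the Pods created by an RS?

Observation: deleting a Pod created by the RS does not get rid of it, because the RS immediately creates a replacement.

Conclusion: delete the RS itself. Deleting the RS also deletes all the Pods it created.

Method 1: delete using the manifest file

kubectl delete -f nginx-rs.yaml

Method 2: delete by name from the command line

kubectl delete rs nginx-rs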

4. Deployment controller

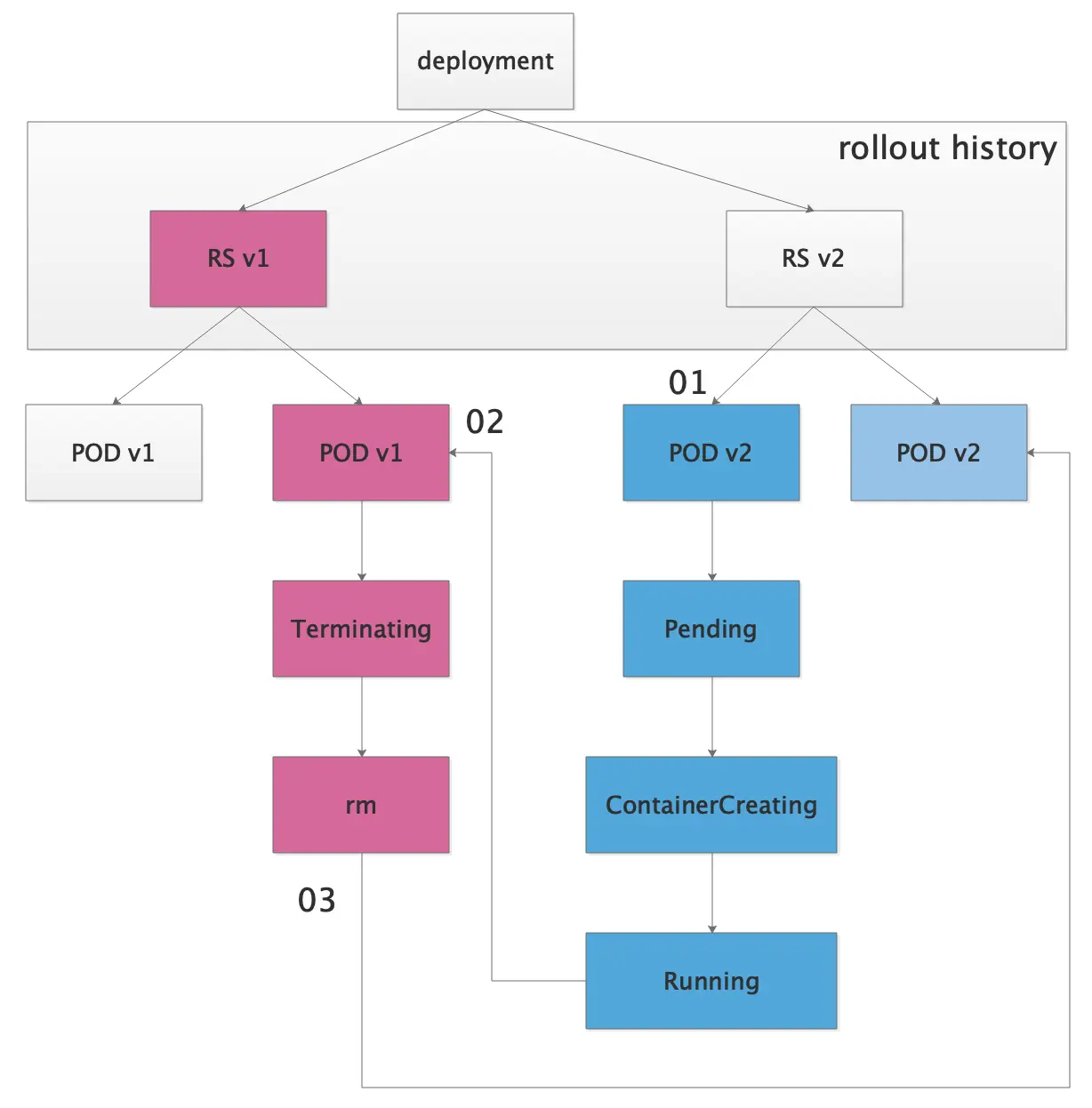

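1) How Deployment and RS relate

The Deployment creates a ReplicaSet.

The ReplicaSet creates the Pods and keeps the desired number of them running.

On a version update, the Deployment creates a ReplicaSet for the new version and uses it to roll the Pods over to the new version.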

2) Manifest

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-dp
  name: nginx-dp
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-dp
  template:
    metadata:
      labels:
        app: nginx-dp
      name: nginx-dp
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginx-dp

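3) Apply the manifest

kubectl create -f nginx-dp.yaml

4) Inspect the Deployment

kubectl get pod -o wide

kubectl get deployments.apps

kubectl describe deployments.apps nginx-dp

5) Update the version

Method 1: change the image from the command line, targeting the manifest file. The name before the equals sign must be the container name from the manifest (nginx-dp).

kubectl set image -f nginx-dp.yaml nginx-dp=nginx:1.24.0

Check whether the update happened:

kubectl get pod

kubectl describe deployments.apps nginx-dp

kubectl describe pod nginx-dp-7c596b4d95-6ztld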

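Method 2: change the image from the command line, targeting the resource type and name

Open two terminals. In the first, watch the Pod status:

kubectl get pod -w

In the second, perform the update:

kubectl set image deployment nginx-dp nginx-dp=nginx:1.24

kubectl set image deployment nginx-dp nginx-dp=nginx:1.25

Inspect the Deployment after the update:

kubectl describe deployments.apps nginx-dp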

----------------------------------------------------

Normal ScalingReplicaSet 14m deployment-controller Scaled up replica set nginx-dp-7c596b4d95 to 1

Normal ScalingReplicaSet 14m deployment-controller Scaled down replica set nginx-dp-9c74bb6c7 to 1

Normal ScalingReplicaSet 14m deployment-controller Scaled up replica set nginx-dp-7c596b4d95 to 2

Normal ScalingReplicaSet 13m deployment-controller Scaled down replica set nginx-dp-9c74bb6c7 to 0

Normal ScalingReplicaSet 8m30s deployment-controller Scaled up replica set nginx-dp-9c74bb6c7 to 1

Normal ScalingReplicaSet 8m29s (x2 over 32m) deployment-controller Scaled up replica set nginx-dp-9c74bb6c7 to 2

Normal ScalingReplicaSet 8m29s deployment-controller Scaled down replica set nginx-dp-7c596b4d95 to 1

Normal ScalingReplicaSet 8m28s deployment-controller Scaled down replica set nginx-dp-7c596b4d95 to 0

Update process:

nginx-dp-7c596b4d95-8z7kf #old version

nginx-dp-7c596b4d95-6ztld #old version

nginx-dp-9c74bb6c7-pgfxz 0/1 Pending

nginx-dp-9c74bb6c7-pgfxz 0/1 Pending

nginx-dp-9c74bb6c7-pgfxz 0/1 ContainerCreating #pulling the new image

nginx-dp-9c74bb6c7-pgfxz 1/1 Running #new Pod is running

nginx-dp-7c596b4d95-8z7kf 1/1 Terminating #stop one old Pod

nginx-dp-9c74bb6c7-h7mk2 0/1 Pending

nginx-dp-9c74bb6c7-h7mk2 0/1 Pending

nginx-dp-9c74bb6c7-h7mk2 0/1 ContainerCreating #pulling the new image

nginx-dp-9c74bb6c7-h7mk2 1/1 Running #new Pod is running

nginx-dp-7c596b4d95-6ztld 1/1 Terminating #stop one old Pod

nginx-dp-7c596b4d95-8z7kf 0/1 Terminating #waiting for the old Pod to exit

nginx-dp-7c596b4d95-6ztld 0/1 Terminating #waiting for the old Pod to exit
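The event log and Pod watch above show the new and old ReplicaSets being scaled in alternating steps. The Python sketch below reproduces that ordering under stated assumptions: 2 replicas (as in the event log), the default rolling-update budget of 25% maxSurge (rounded up) and 25% maxUnavailable (rounded down), and new Pods that become ready immediately. The function `rolling_update` is hypothetical and is a simplification, not the Deployment controller's actual code.

```python
import math


def rolling_update(replicas, max_surge_pct=25, max_unavailable_pct=25):
    """Simulate ReplicaSet scaling steps; assumes new Pods become ready at once."""
    max_surge = math.ceil(replicas * max_surge_pct / 100)               # rounds up
    max_unavailable = math.floor(replicas * max_unavailable_pct / 100)  # rounds down
    if replicas > 0 and max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new, steps = replicas, 0, []
    while old > 0 or new < replicas:
        # Scale the new ReplicaSet up as far as the surge budget allows.
        up = min(replicas + max_surge - (old + new), replicas - new)
        if up > 0:
            new += up
            steps.append(f"Scaled up new replica set to {new}")
        # Scale the old ReplicaSet down while enough Pods stay available.
        down = min(old, (old + new) - (replicas - max_unavailable))
        if down > 0:
            old -= down
            steps.append(f"Scaled down old replica set to {old}")
    return steps


for step in rolling_update(2):
    print(step)
```

With 2 replicas the budget works out to one extra Pod and zero unavailable Pods, so each old Pod is only stopped after a new one is running.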

Check the rolling update status:

kubectl rollout status deployment nginx-dp

Rolling update diagram:

6) Roll back to the previous version

kubectl describe deployments.apps nginx-dp

kubectl rollout undo deployment nginx-dp

kubectl describe deployments.apps nginx-dp

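7) Roll back to a specific version

Scenario: v1 is 1.24.0, v2 is 1.25.0, v3 is 1.99.0 (a deliberately wrong tag); roll back to v1.

Create the first version, 1.24.0 (this assumes nginx-dp.yaml pins the image to nginx:1.24.0; note that --record is deprecated in recent kubectl releases)

kubectl create -f nginx-dp.yaml --record

Update to the second version, 1.25.0

kubectl set image deployment nginx-dp nginx-dp=nginx:1.25.0

Update to the third version, 1.99.0 (deliberately wrong)

kubectl set image deployment nginx-dp nginx-dp=nginx:1.99.0

List all revisions

kubectl rollout history deployment nginx-dp

Show the details of one revision

kubectl rollout history deployment nginx-dp --revision=1

Roll back to a specific revision

kubectl rollout undo deployment nginx-dp --to-revision=1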
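One detail that is easy to miss when reading `kubectl rollout history` afterwards: rolling back to a revision does not rewind the counter. As far as I understand the behaviour, the chosen revision's template becomes the newest revision and its old number disappears from the list. A minimal Python sketch of that bookkeeping, using the three image tags from the steps above (the function `undo_to` and the dict layout are hypothetical):

```python
def undo_to(history: dict, revision: int) -> dict:
    """Model a rollback: the chosen revision is re-issued under the next number."""
    updated = dict(history)
    image = updated.pop(revision)        # the old revision number goes away
    updated[max(history) + 1] = image    # and its template becomes the newest one
    return updated


history = {1: "nginx:1.24.0", 2: "nginx:1.25.0", 3: "nginx:1.99.0"}
print(undo_to(history, 1))
```

So after rolling back to revision 1 in this scenario, the history would be expected to list revisions 2, 3, and 4, with 4 running nginx:1.24.0.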

8) Scale out and in

kubectl scale deployment nginx-dp --replicas=5

kubectl scale deployment nginx-dp --replicas=2

Summary:

Change the image version on the fly

kubectl set image deployment nginx-dp nginx-dp=nginx:1.24

kubectl set image deployment nginx-dp nginx-dp=nginx:1.25

List all revisions

kubectl rollout history deployment nginx-dp

Show the details of one revision

kubectl rollout history deployment nginx-dp --revision=2

Roll back to the previous revision

kubectl rollout undo deployment nginx-dp

Roll back to a specific revision

kubectl rollout undo deployment nginx-dp --to-revision=1

Scale out or in

kubectl scale deployment/nginx-dp --replicas=10

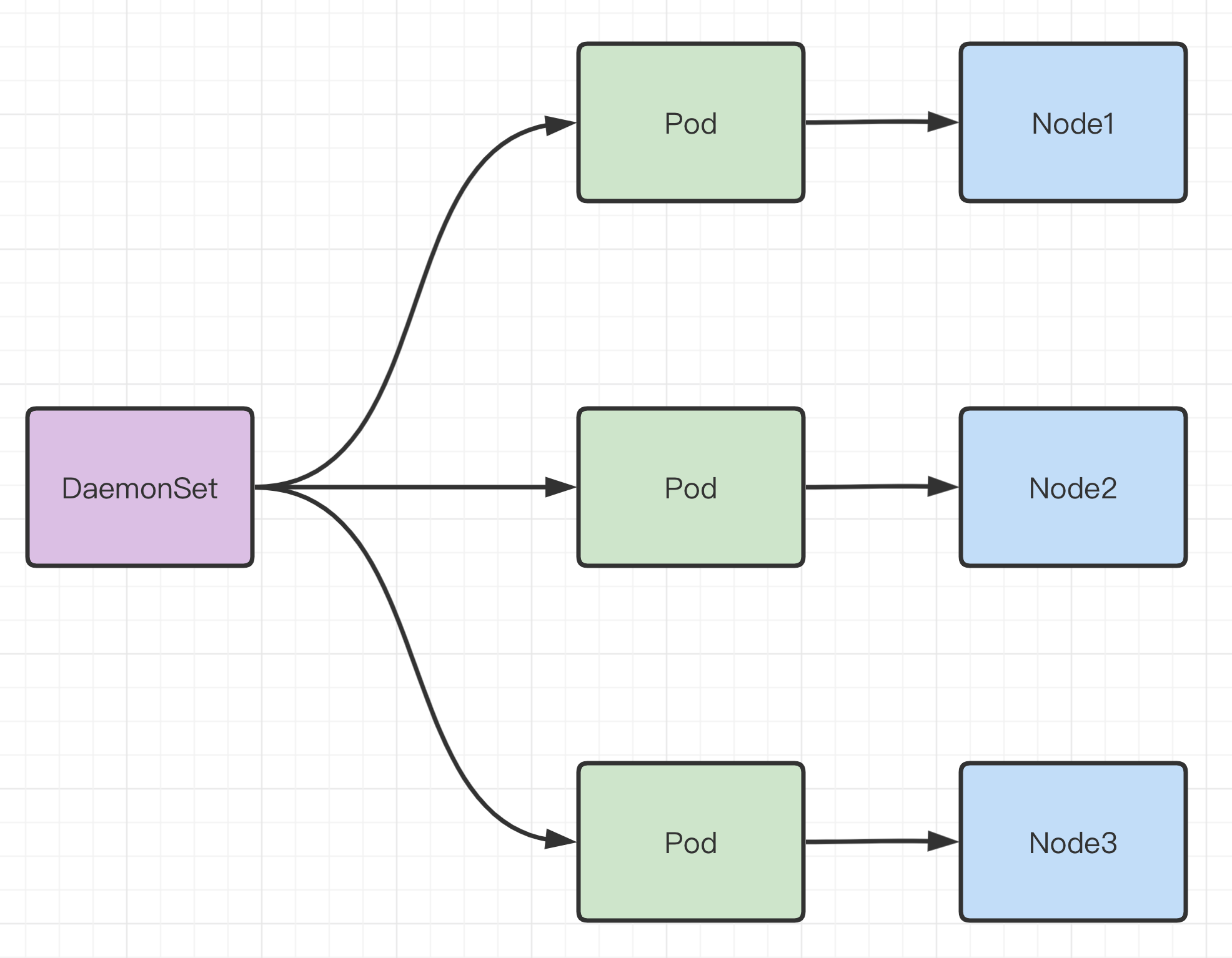

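5. DaemonSet controller

1) About DaemonSet

A DaemonSet ensures that all (or some) nodes run a copy of a Pod. When a node joins the cluster, a Pod is added to it. When a node is removed from the cluster, its Pod is garbage collected. Deleting a DaemonSet deletes all the Pods it created.

Put simply, each node runs exactly one such Pod.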
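The behaviour described above (one Pod per node, added when a node joins, removed when it leaves) can be sketched as a comparison between the node list and the nodes that already host a Pod. This Python sketch is an illustration only; `daemonset_actions`, the node names, and the Pod name are hypothetical.

```python
def daemonset_actions(nodes: set, pods_by_node: dict) -> list:
    """Return the actions needed so that every node runs exactly one Pod."""
    actions = []
    for node in sorted(nodes):
        if node not in pods_by_node:
            # A node without a Pod (for example one that just joined) gets one.
            actions.append(f"create pod on {node}")
    for node in sorted(pods_by_node):
        if node not in nodes:
            # The node has left the cluster, so its Pod is cleaned up.
            actions.append(f"delete pod on {node}")
    return actions


print(daemonset_actions({"node1", "node2", "node3"}, {"node1": "nginx-ds-abc"}))
```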

Common use cases:

Monitoring agents

Log collection agents

2) DaemonSet example

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ds
  labels:
    app: nginx-ds
spec:
  selector:
    matchLabels:
      app: nginx-ds
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: nginx-ds
        image: nginx:1.16
        ports:
        - containerPort: 80

6. StatefulSet controller

https://kubernetes.io/zh-cn/docs/concepts/workloads/controllers/statefulset/

https://kubernetes.io/zh-cn/docs/concepts/services-networking/service/#headless-services

https://kubernetes.io/zh-cn/docs/tutorials/stateful-application/basic-stateful-set/

https://blog.nashtechglobal.com/how-to-set-up-a-2-node-elasticsearch-cluster-on-kubernetes-minikube/

https://www.processon.com/view/link/66e155c15b0abf01932963a4?cid=66e0f355df5e372d74ea06b9

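1) Stateful and stateless services

Stateless service:

Instances do not need to reach one another at a fixed address. For example, you can deploy several Nginx instances, and none of them needs to talk to the others at a fixed address.

Stateful service:

Instances need to reach one another at a fixed, stable address, and the application misbehaves if that address changes. Examples: MySQL primary/replica replication, Redis primary/replica replication, ES clusters, MongoDB replica sets.

2) About StatefulSet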

StatefulSet is similar to the ReplicaSet covered earlier

3) Headless Service

Normal flow:

DNS name --> ClusterIP --> Pod

Headless Service:

DNS name --> Pod

4) Define a Headless Service

cat > nginx-svc.yaml << 'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: default
  labels:
    app: nginx
spec:
  selector:
    app: nginx
  ports:
  - name: http
    port: 80
  clusterIP: None
EOF

The only difference from a regular Service is clusterIP: None.

5) StatefulSet

Create the PVs:

cat > nginx-pv.yaml << 'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: /tmp/pv01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv02
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: /tmp/pv02
EOF

Create the StatefulSet manifest:

cat > nginx-sf.yaml << 'EOF'
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
  namespace: default
spec:
  serviceName: "nginx"
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - name: web
          containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1Gi
EOF
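What makes the StatefulSet above "stateful" is that each replica gets a predictable identity: an ordinal Pod name, a DNS name under the headless Service, and its own PVC generated from `volumeClaimTemplates`. The Python sketch below derives those names for the `web` StatefulSet; the helper `statefulset_identities` is hypothetical, and the `cluster.local` cluster domain is an assumption (it is the common default, but a cluster can be configured differently).

```python
def statefulset_identities(name, service, replicas, claim,
                           namespace="default", cluster_domain="cluster.local"):
    """Derive the stable per-replica names a StatefulSet is expected to produce."""
    identities = []
    for ordinal in range(replicas):
        pod = f"{name}-{ordinal}"  # Pods are numbered from 0
        identities.append({
            "pod": pod,
            # Per-Pod DNS record published through the headless Service
            "dns": f"{pod}.{service}.{namespace}.svc.{cluster_domain}",
            # One PVC per Pod: <claim template name>-<pod name>
            "pvc": f"{claim}-{pod}",
        })
    return identities


for identity in statefulset_identities("web", "nginx", 2, "www"):
    print(identity)
```

Inside the same namespace the short form `web-0.nginx` resolves as well, which is the style used for `discovery.seed_hosts` in the ES example.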

Deploy an ES cluster

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-pv1
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  local:
    path: /data/es
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node-01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-pv2
  labels:
    type: local
spec:
  storageClassName: local-storage
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  local:
    path: /data/es
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - node-02

---

apiVersion: v1
kind: Service
metadata:
  name: es
  labels:
    app: es
spec:
  ports:
  - port: 9200
    name: web
  clusterIP: None
  selector:
    app: es

---

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es
spec:
  selector:
    matchLabels:
      app: es
  serviceName: "es"
  replicas: 2
  template:
    metadata:
      labels:
        app: es
    spec:
      imagePullSecrets:
      - name: harbor-secret
      containers:
      - name: es
        image: luffy.com/base/elasticsearch:7.9.1
        imagePullPolicy: IfNotPresent
        env:
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: cluster.name
          value: es-k8s
        - name: discovery.seed_hosts
          value: "es-0.es,es-1.es"
        - name: cluster.initial_master_nodes
          value: "es-0,es-1"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"
        ports:
        - containerPort: 9200
          name: web
        - containerPort: 9300
          name: cluster
        volumeMounts:
        - name: es-data
          mountPath: /usr/share/elasticsearch/data
  volumeClaimTemplates:
  - metadata:
      name: es-data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1Gi
      storageClassName: local-storage

---

apiVersion: v1
kind: Service
metadata:
  name: es-svc
  labels:
    app: es
spec:
  ports:
  - port: 9200
    name: web
    targetPort: 9200
  selector:
    app: es

---

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: es-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: k8s.es.com
    http:
      paths:
      - path: /
        pathType: ImplementationSpecific
        backend:
          service:
            name: es-svc
            port:
              number: 9200


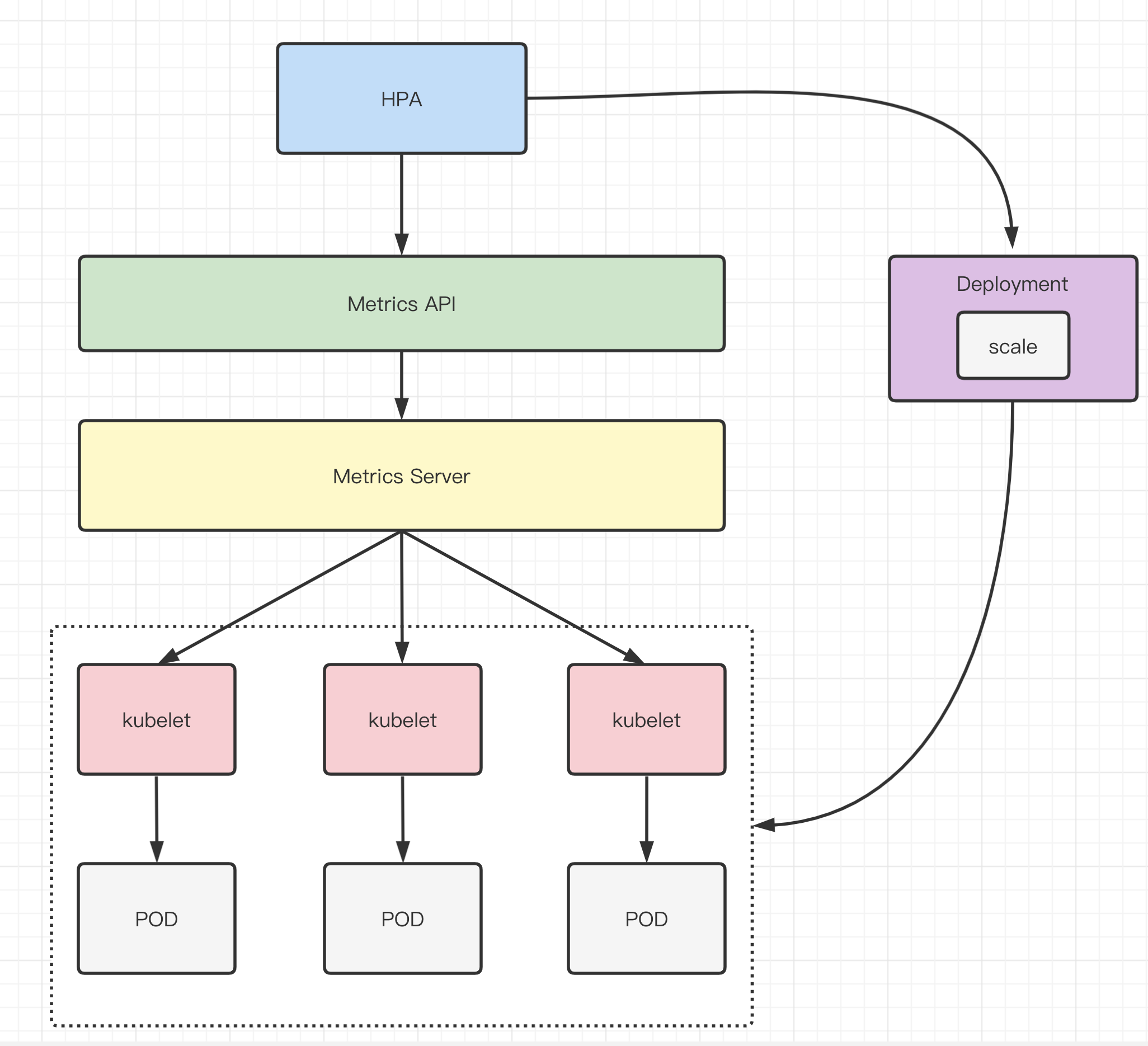

7. Horizontal Pod Autoscaling (HPA)

1) About HPA

Official documentation:

https://kubernetes.io/zh/docs/tasks/run-application/horizontal-pod-autoscale-walkthrough/

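How HPA works:

HPA analyzes the load of all Pods from the collected metrics and, based on the thresholds we configure, automatically scales the number of Pods in a ReplicationController, Deployment, ReplicaSet, or StatefulSet up or down.

2) About Metrics Server

Early versions of HPA relied on a component called Heapster for CPU and memory metrics. Later Kubernetes versions switched to Metrics Server, which exposes Pod CPU and memory metrics through the Metrics API, so the data can be queried through the Kubernetes API.

3) Install Metrics Server

Download the project's YAML file

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.4.0/components.yaml

The default image is hosted on Google's registry. If that registry is not reachable from your nodes, download the image by other means first and then load it onto each node.

Edit the configuration file: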

    spec:
      hostNetwork: true                 # use the host network
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --kubelet-insecure-tls        # skip kubelet certificate verification
        image: metrics-server:v0.4.0    # changed to the locally loaded image

Create the resources:

kubectl apply -f components.yaml

Check the result:

[root@master ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master 79m 7% 1794Mi 46%

node1 26m 2% 795Mi 20%

node2 23m 2% 785Mi 20%

4) Build a test image

Create the test index page

cat > index.php << 'EOF'
<?php
$x = 0.0001;
for ($i = 0; $i <= 1000000; $i++) {
    $x += sqrt($x);
}
echo "OK!";
?>
EOF

Create the dockerfile

cat > dockerfile << 'EOF'
FROM php:5-apache
ADD index.php /var/www/html/index.php
RUN chmod a+rx index.php
EOF

Build the image

docker build -t php:v1 .

Export the image and copy it to the other nodes:

docker save php:v1 > php.tar

scp php.tar 10.0.0.12:/opt/

scp php.tar 10.0.0.13:/opt/

Load the image on each node:

docker load < /opt/php.tar

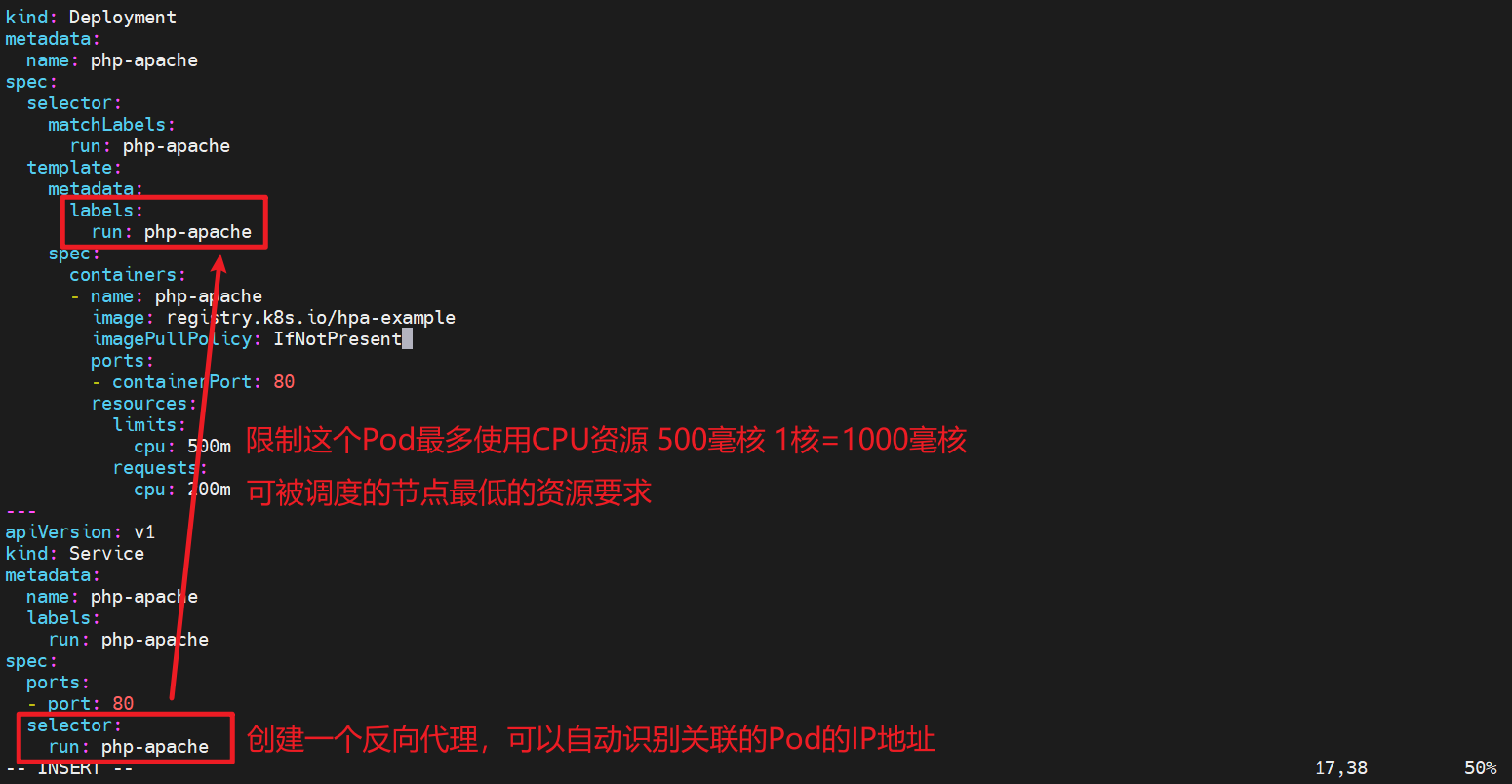

5) Create the Deployment

cat > php-dp.yaml << 'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
spec:
  replicas: 1
  selector:
    matchLabels:
      run: php-apache
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
      - image: php:v1
        imagePullPolicy: IfNotPresent
        name: php-apache
        ports:
        - containerPort: 80
          protocol: TCP
        resources:
          requests:
            cpu: 200m
EOF

6) Create the HPA

cat > php-hpa.yaml << 'EOF'
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
  namespace: default
spec:
  maxReplicas: 10
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  targetCPUUtilizationPercentage: 50
EOF
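To see what the HPA above will do with a given load, it helps to work through the scaling formula from the Kubernetes documentation: desired = ceil(currentReplicas × currentMetric / targetMetric), skipped when the ratio is within a 10% tolerance of 1, then clamped to minReplicas and maxReplicas. The Python sketch below applies it with this manifest's values (target 50%, min 1, max 10). The function `desired_replicas` is hypothetical and leaves out details such as unready Pods and missing metrics.

```python
import math


def desired_replicas(current_replicas, current_util, target_util,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Approximate the HPA replica calculation for a CPU utilization target."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone.
        return current_replicas
    desired = math.ceil(current_replicas * current_util / target_util)
    # Never go below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(1, 250, 50))  # one Pod at 250% of its CPU request
print(desired_replicas(4, 52, 50))   # within tolerance
print(desired_replicas(4, 500, 50))  # capped by maxReplicas
```

Utilization here is a percentage of the CPU request, which is why the Deployment has to declare `resources.requests.cpu`.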

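7) Watch the HPA scale out and in

kubectl get hpa -w

kubectl get pod -w

8) Load test

while true; do wget -q -O- http://10.2.1.18; done

9) Shortcut commands

Create the Deployment (this works on older kubectl versions only; since kubectl 1.18, kubectl run creates a bare Pod instead of a Deployment, and recent releases have dropped the --requests flag)

kubectl run php-apache --image=php:v1 --requests=cpu=200m --expose --port=80

Create the HPA

kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10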

10) Scaling in

Once CPU usage drops, Kubernetes by default waits 5 minutes before scaling in.
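The 5-minute delay comes from the scale-down stabilization window: when the metrics call for fewer replicas, the HPA looks back over the replica counts it computed during the window and uses the highest one, so a brief dip in load does not remove Pods right away. A minimal Python sketch of that selection, assuming the default 300-second window; the function `stabilized` and the sample timestamps are hypothetical.

```python
def stabilized(recommendations, now, window=300):
    """Pick the highest replica recommendation made within the last `window` seconds.

    `recommendations` is a list of (timestamp_seconds, desired_replicas) pairs.
    Returns None when no recommendation falls inside the window.
    """
    recent = [replicas for ts, replicas in recommendations if now - ts <= window]
    return max(recent, default=None)


history = [(0, 8), (120, 5), (290, 1)]
print(stabilized(history, now=290))  # the 8 from t=0 is still inside the window
print(stabilized(history, now=430))  # only the recommendation from t=290 remains
```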

Updated: 2024-09-12 09:55:36